The "Observer Effect" in GitOps: Why Pure Automation Isn't Enough

GitOps promises seamless automation, but when something breaks, the reconciliation process becomes a black box. Here's why visibility matters more than you think.

If you've been running GitOps in production for a while, you've probably hit a familiar wall. On paper, the model is compelling: Git as the source of truth, declarative configuration, continuous reconciliation.

In practice, when something breaks, you often find yourself staring at an OutOfSync status with very little insight into what actually went wrong.

This isn't GitOps failing. It's a visibility gap that's built into how it works.

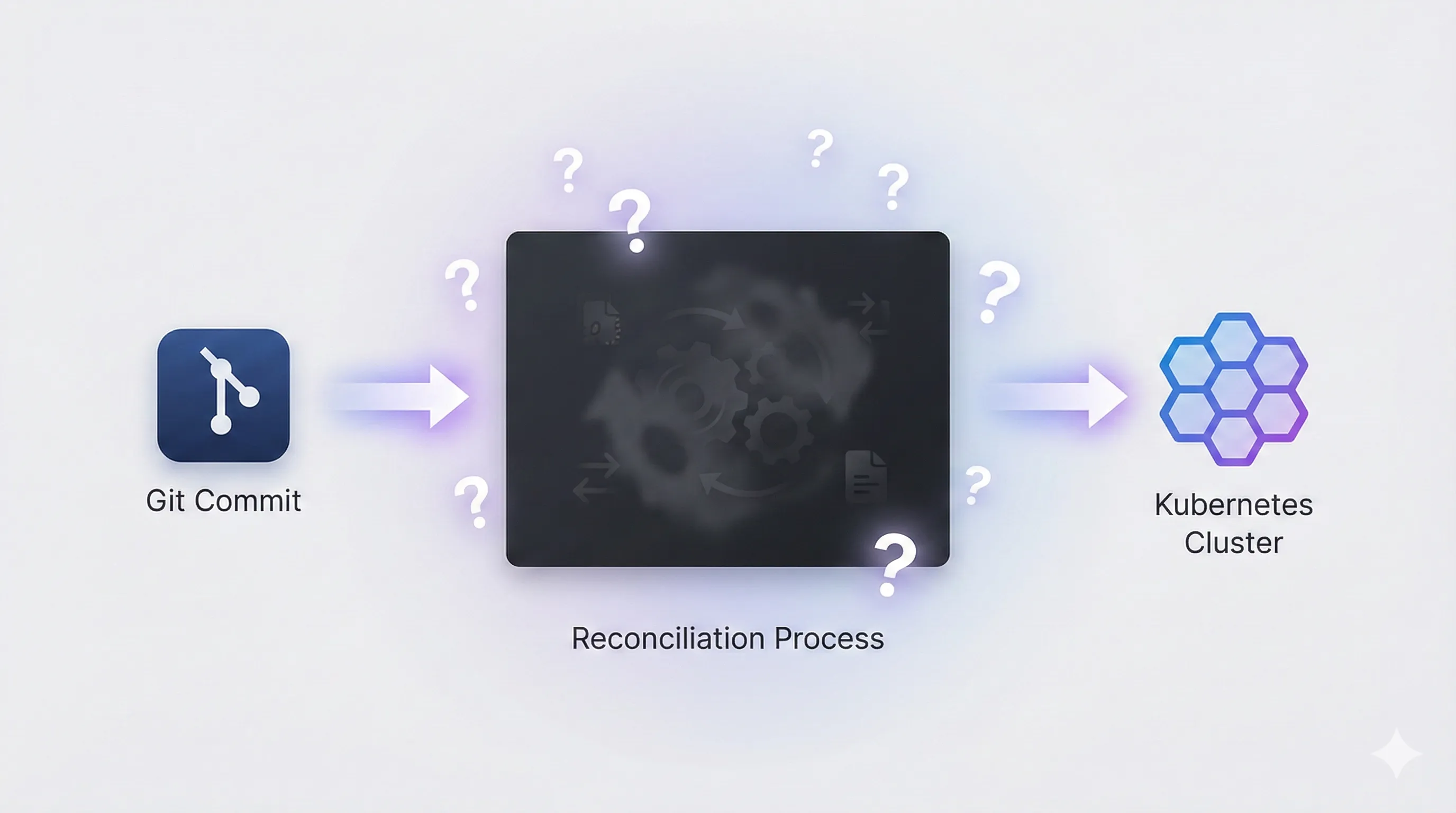

The Black Box Between Commit and Cluster

According to GitOps, your cluster state reflects what's in your Git repo. True enough. But what happens in between?

When you push a commit, the GitOps controller (Flux, Argo CD, etc.) starts a reconciliation process. That process has many steps: detecting the source, rendering manifests, diffing against current state, figuring out the apply order, running hooks, doing health checks, updating status. Sometimes you even have webhook receivers, image automation, or external secrets in the mix.

Most of this is invisible. You see the commit. Later, you see the cluster state change (or not). But the reconciliation itself? Black box.

This matters because when sync fails, you need to know where it failed. Was it rendering? Dependency order? A health check timeout? A secret that couldn't be fetched? Without seeing into the reconciliation process, you cannot really know the root cause.

A Story That Probably Sounds Familiar

It's 2 PM on a Tuesday. You deploy a new feature, nothing fancy, just a straightforward update.

Then you see it: OutOfSync.

Okay, no problem. You check the Git diff. Looks fine. You check the pods. Everything's running. You pull up events and get... vague controller messages that don't quite connect.

So you do what you always do. You start tailing logs. One controller. Then another. Then a third. You're jumping between terminal tabs, squinting at timestamps, trying to stitch together a timeline that makes sense.

Forty-five minutes later, you finally find it: a CRD that your workload needed hadn't been applied yet, because it depended on an external secrets store that blipped during a cert rotation half an hour earlier.

The fix? Two minutes. A quick re-sync and done.

But that investigation? That cost you most of an hour. And probably a bit of your sanity.

The worst part? This isn't some rare edge case. It's just Tuesday.

The Cost of Eventual Consistency

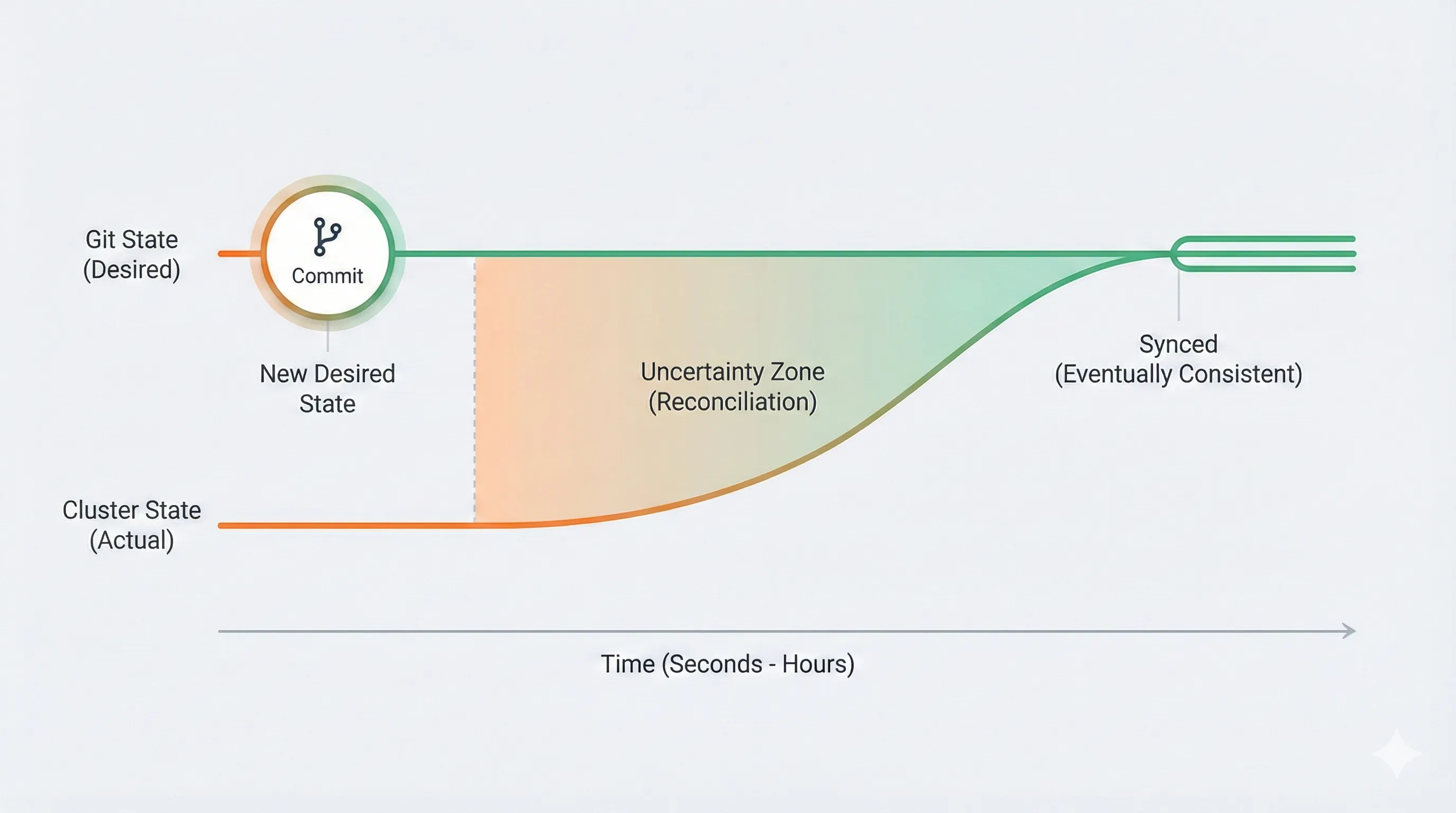

Part of what makes this hard is that GitOps is eventually consistent by design. Your Git state and cluster state will match, eventually. The reconciliation loop will retry, eventually. Health checks will pass, eventually.

This is actually a strength. Eventual consistency makes GitOps resilient. Controllers recover from temporary failures on their own. Networks blip, secrets rotate, nodes restart, and the system heals itself.

But there's a trade-off: you can't assume what's in Git is what's running right now. During that gap, which could be seconds or hours, there's partial blindness.

The longer that gap stays open, the more uncertainty builds up. And uncertainty during an incident costs time and money.

Dashboard Fatigue Is Real

When teams run into this kind of uncertainty, the next instinct is usually to add more monitoring. Add Prometheus metrics. Build Grafana dashboards. Set up alerts for sync failures.

This helps, but only to a certain point. If you've run Kubernetes at any real scale, dashboard fatigue kicks in.

When everything alerts, nothing is really alerting you. It's not unusual for teams to see 2,000+ alerts a week, with only about 3% worth acting on. The rest is noise: temporary blips, self-healing events, low-priority warnings.

The problem isn't missing data; it's missing context. You have plenty of alerts, but they don't tell you enough. "HelmRelease failed to sync" says something broke, but not where in the process, what else it affects, or which commit caused it.

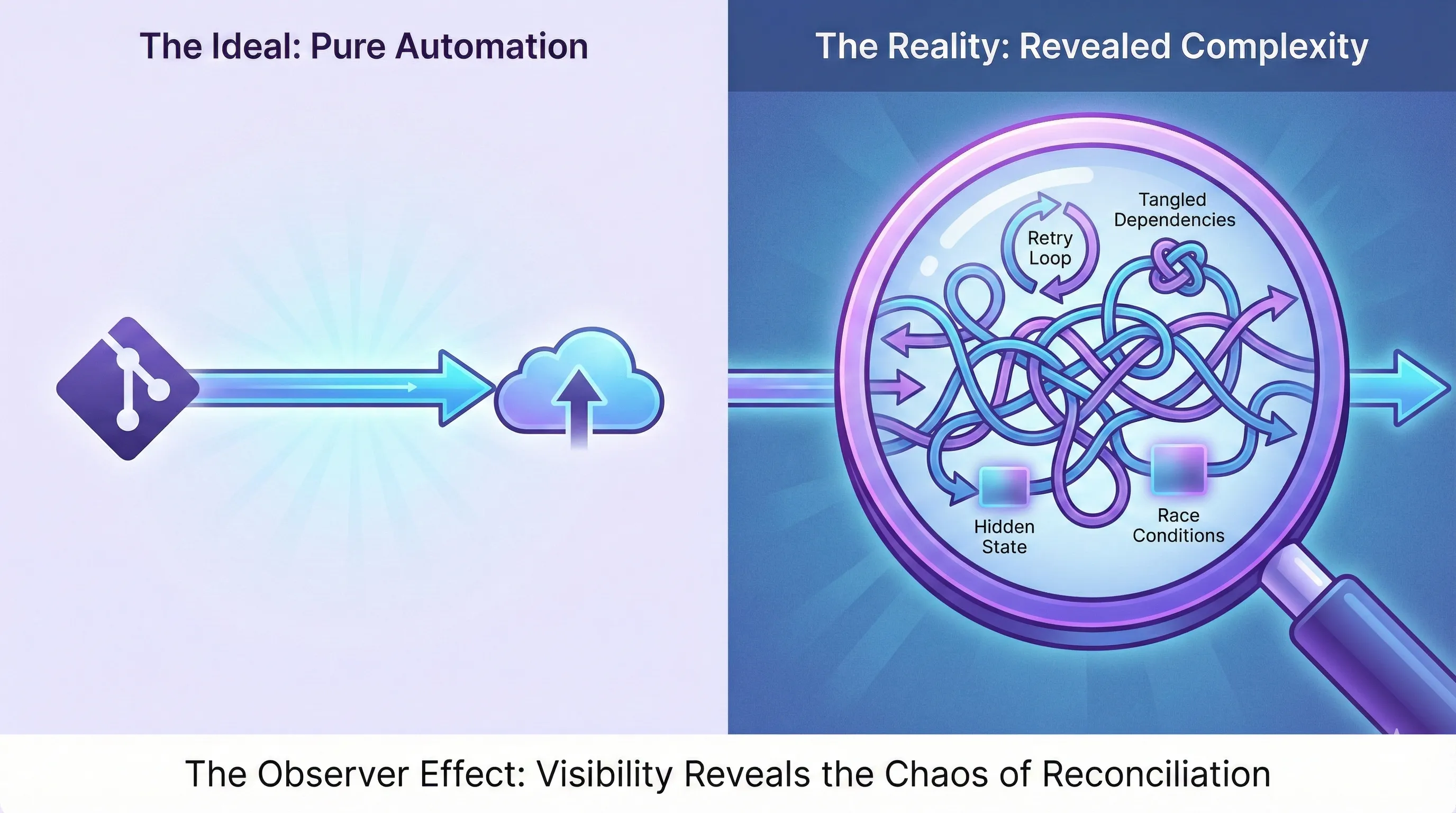

The Observer Effect, Infrastructure Style

This tension between trusting automation and needing to understand it is where a different kind of observer effect shows up in infrastructure.

In physics, the observer effect says that measuring a system changes it. The infrastructure version is about what happens when you stop observing.

GitOps is built on the idea that you shouldn't need to watch it. Automation handles things. You set the desired state, the controller makes it happen. In theory, you can walk away.

In practice, you can't walk away from something you don't understand. And when all you can see is the input (Git commits) and output (cluster state), understanding is hard. You see outcomes but not causes.

This creates an odd situation: the more you automate, the more you need to see what the automation is doing. Automation without visibility isn't trustworthy; it's just magic. And magic is hard to debug.

What Good Visibility Looks Like

So what fixes it? Not ditching GitOps; that would throw away real progress. The answer is treating reconciliation visibility as a first-class requirement rather than an afterthought.

Good visibility in GitOps means a few things:

Linking commits to resources. Trace any Kubernetes resource to the exact commit, file, and line that defined it, and vice versa.

Showing reconciliation status. Not just "synced" or "not synced", but where things are stuck: waiting on dependencies, failing health checks, or running hooks.

Making ownership and dependencies clear. When a Deployment fails because a ConfigMap doesn't exist yet, that connection should be obvious.

Giving context, not just alerts. Every alert comes with the controller, Git history, recent changes, and dependencies you need to start debugging.

Connecting different domains. Git, Kubernetes status, and app-level observability (logs, metrics, traces) in one place.
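The dependency point in the list above can be made concrete with a small sketch: given which resources depend on which, walk from the failing resource down to the dependency that is actually missing or not ready. This is a minimal illustration under assumed data, not any controller's API. The `Resource` type, the `root_causes` function, and the resource names and commit ids are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Resource:
    name: str
    ready: bool
    source_commit: str  # the commit that last defined this resource
    depends_on: List[str] = field(default_factory=list)


def root_causes(name: str, resources: Dict[str, Resource]) -> List[str]:
    """Return the deepest missing or not-ready dependencies behind a resource."""
    seen: Set[str] = set()
    causes: List[str] = []

    def visit(current: str) -> None:
        if current in seen:  # guards against dependency cycles
            return
        seen.add(current)
        res = resources.get(current)
        if res is None:
            causes.append(f"{current} (missing)")
            return
        if res.ready:
            return
        blocked = [
            dep for dep in res.depends_on
            if dep not in resources or not resources[dep].ready
        ]
        if not blocked:
            # Not ready, but nothing upstream is blocking it: this is a root cause.
            causes.append(f"{current} (not ready, commit {res.source_commit})")
            return
        for dep in blocked:
            visit(dep)

    visit(name)
    return causes


# Made-up cluster snapshot: the Deployment waits on a ConfigMap that does not exist yet.
resources = {
    "Deployment/api": Resource(
        "Deployment/api", ready=False, source_commit="abc1234",
        depends_on=["ConfigMap/api-config", "Secret/db"],
    ),
    "Secret/db": Resource("Secret/db", ready=True, source_commit="9f8e7d6"),
}

print(root_causes("Deployment/api", resources))
```

Carrying the source commit on each resource is what ties the dependency view back to Git: the same walk that finds the blocking resource also says which change introduced it.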

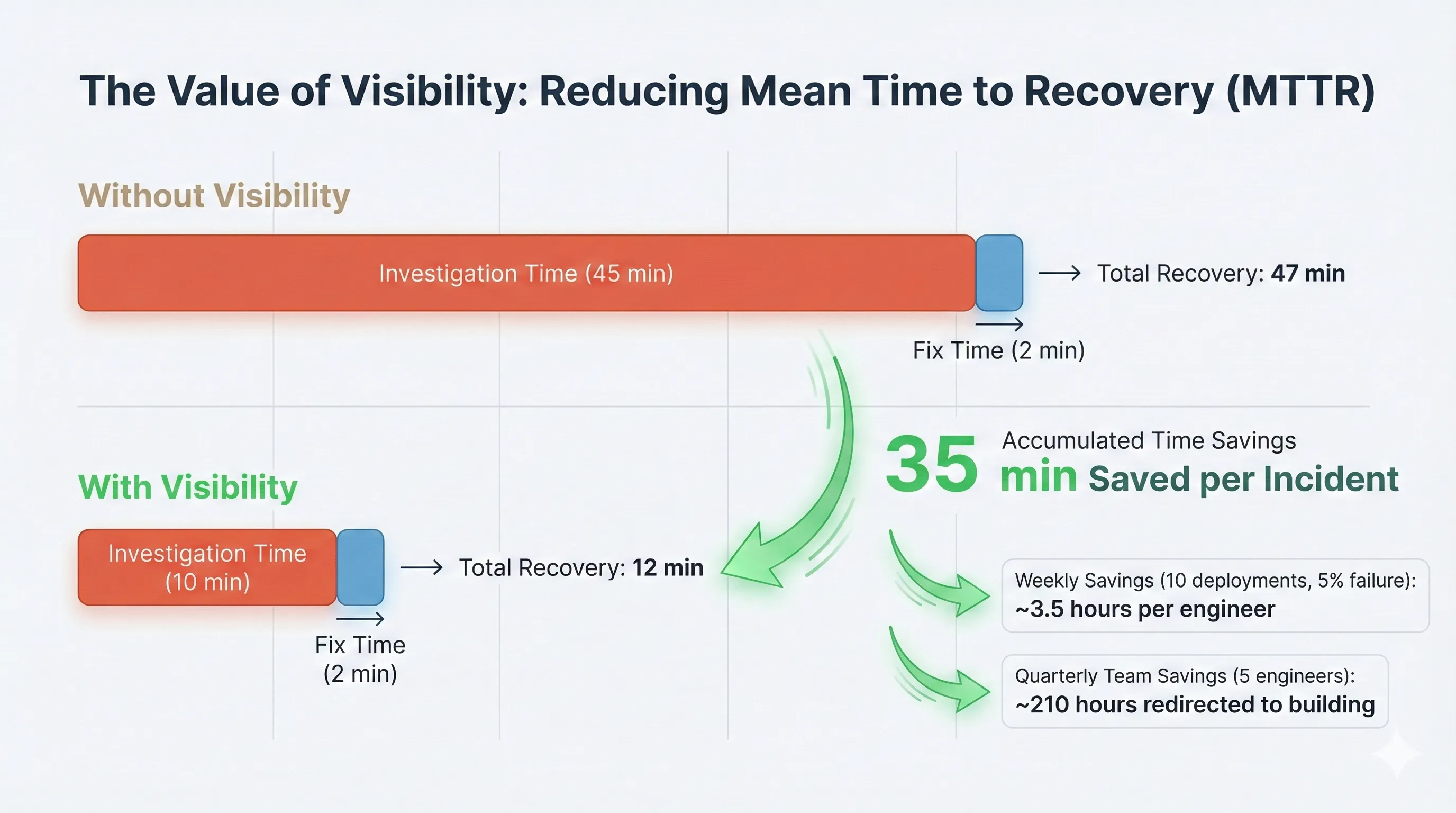

The MTTR Math

Mean Time to Recovery is mostly mean time to understanding. The fix itself rarely takes long: it's a config tweak, a rollback, a restart. What burns time is figuring out what to fix: correlating logs, tracing dependencies, ruling out false leads.

That 45-minute investigation for a 2-minute fix isn't an anomaly. It's the typical ratio.

This matters more in GitOps environments because deployment frequency is higher by design. You're not shipping once a week with a war room on standby; you're pushing incremental changes continuously. That means more opportunities for something to go sideways, and less tolerance for lengthy investigations.

Here's the math: ten deployments a day, 5% incident rate, 45 minutes per investigation. Over a five-day week that's about 2.5 incidents and just under two hours spent in reactive mode, per engineer carrying that load. Cut investigation time to 10 minutes and you reclaim most of that. Multiply across a team of five over a quarter, and you're looking at close to a hundred engineering hours redirected from debugging to building.
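To make the arithmetic easy to check, here is a minimal sketch of the calculation. The five-day working week and the 13-week quarter are assumptions that the text does not state, and the function and parameter names are illustrative.

```python
def weekly_investigation_hours(
    deploys_per_day: float,
    incident_rate: float,
    minutes_per_investigation: float,
    days_per_week: int = 5,  # assumption: deployments happen on working days only
) -> float:
    """Hours per week spent investigating failed deployments."""
    incidents_per_week = deploys_per_day * days_per_week * incident_rate
    return incidents_per_week * minutes_per_investigation / 60


before = weekly_investigation_hours(10, 0.05, 45)
after = weekly_investigation_hours(10, 0.05, 10)

team_size = 5
weeks_per_quarter = 13  # assumption: a quarter is roughly 13 weeks
saved_per_quarter = (before - after) * team_size * weeks_per_quarter

print(f"before: {before:.2f} h/week, after: {after:.2f} h/week")
print(f"saved per quarter for a team of {team_size}: {saved_per_quarter:.0f} h")
```

Under these assumptions the weekly cost is 2.5 incidents at 45 minutes each, or just under two hours. Changing `days_per_week` or the incident rate shifts the totals, which is a useful reminder that the result is only as good as the inputs.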

MTTR improvements don't show up as dramatic wins. They show up as fewer interrupted afternoons, smaller incident backlogs, and teams that can actually sustain high deployment velocity without burning out.

Where Kunobi Fits In

These are the problems we kept running into.

We could see the commit. We could see the cluster. But not what happened in between.

Kunobi makes reconciliation visible again, without replacing Flux or Argo CD. Git stays the source of truth.

We connect Git history, reconciliation state, and live resources. When something is out of sync, you see why.

You still commit to Git, still let controllers reconcile. Now you can see what's happening in that black box.

We're in open beta. If you're tired of grepping logs and correlating timestamps, give it a look.
