Stop grep-ing Logs: Debugging Flux Syncs at the Speed of Sight

Kubernetes is hierarchical. Your CLI isn't. It's time to see the graph.

The "Unknown" State

If you run Flux in production, you are likely familiar with this specific reconciliation loop. You push a commit to update a HelmRelease. You wait. You check the status:

`flux get kustomizations`

And you see False or Unknown.

Now the forensics begin. Did the artifact fail? Is the source controller unable to fetch the Helm chart? Or did the chart render fine, but the underlying Deployment is crashing because of a bad ConfigMap?

To find out, you usually have to traverse the chain manually:

- `kubectl describe helmrelease my-app` (Check events)
- `kubectl get pods` (Find the new pods)
- `kubectl logs <pod-id>` (Check application logs)
- `flux logs --level=error` (Check controller logs)

The problem isn't the data—the data is there. The problem is that CLI tools flatten hierarchical data. They show you lists, but GitOps is a graph of dependencies.

The Missing Context: OwnerReferences

Kubernetes relies on the metadata.ownerReferences field to track relationships. This works perfectly for native objects: a Deployment owns a ReplicaSet, which owns a Pod.
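To make the ownership chain concrete, here is a minimal sketch that rebuilds the Deployment, ReplicaSet, Pod tree from metadata.ownerReferences. It assumes the objects are plain dicts shaped like items from `kubectl get ... -o json`; the sample objects, their names, and their UIDs are hypothetical.

```python
from collections import defaultdict


def build_owner_tree(objects):
    """Group objects under their owners via metadata.ownerReferences.

    Returns (roots, children), where children maps an owner UID to the
    list of objects that reference it.
    """
    known_uids = {o["metadata"]["uid"] for o in objects}
    children = defaultdict(list)
    roots = []
    for obj in objects:
        refs = obj["metadata"].get("ownerReferences", [])
        owners = [r["uid"] for r in refs if r["uid"] in known_uids]
        if owners:
            for uid in owners:
                children[uid].append(obj)
        else:
            roots.append(obj)  # no known owner: top of a chain
    return roots, children


def render(obj, children, depth=0):
    """Return indented 'Kind/name' lines for obj and its descendants."""
    lines = ["  " * depth + f'{obj["kind"]}/{obj["metadata"]["name"]}']
    for child in children.get(obj["metadata"]["uid"], []):
        lines.extend(render(child, children, depth + 1))
    return lines


# Hypothetical sample data, not taken from a real cluster.
objects = [
    {"kind": "Deployment", "metadata": {"name": "my-app", "uid": "d1"}},
    {"kind": "ReplicaSet", "metadata": {
        "name": "my-app-7c9f", "uid": "r1",
        "ownerReferences": [{"kind": "Deployment", "name": "my-app", "uid": "d1"}]}},
    {"kind": "Pod", "metadata": {
        "name": "my-app-7c9f-x2x", "uid": "p1",
        "ownerReferences": [{"kind": "ReplicaSet", "name": "my-app-7c9f", "uid": "r1"}]}},
]

roots, children = build_owner_tree(objects)
tree_lines = [line for root in roots for line in render(root, children)]
print("\n".join(tree_lines))
```

Matching on UID rather than name avoids confusing a recreated object with its predecessor of the same name.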

However, GitOps introduces a "logical gap": Flux resources manage their dependencies in their own way.

For example, a HelmRelease defines your application deployment, but it doesn't strictly "own" the resulting Deployment via a standard K8s owner reference. Instead, the Flux helm-controller uses its own fields to track the resources it manages.

When you are debugging, you are forced to mentally jump this gap. You have to check the HelmRelease status, then assume the resulting resources were created, and then switch to standard kubectl commands to track the Deployment down to the Pod level.
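One way to jump this gap by hand is to match on the origin labels that the Flux helm-controller adds to the objects it renders. The sketch below assumes those labels (`helm.toolkit.fluxcd.io/name` and `helm.toolkit.fluxcd.io/namespace`) are present on the workload; it illustrates the idea and is not a description of how Kunobi resolves the link. The sample objects are hypothetical.

```python
# Origin labels set by the Flux helm-controller on rendered objects
# (assumed present; verify on your own cluster).
HELM_NAME_LABEL = "helm.toolkit.fluxcd.io/name"
HELM_NAMESPACE_LABEL = "helm.toolkit.fluxcd.io/namespace"


def managed_by_helmrelease(objects, name, namespace):
    """Return the objects whose labels point back at the given HelmRelease."""
    selected = []
    for obj in objects:
        labels = obj["metadata"].get("labels", {})
        if (labels.get(HELM_NAME_LABEL) == name
                and labels.get(HELM_NAMESPACE_LABEL) == namespace):
            selected.append(obj)
    return selected


# Hypothetical sample data.
objects = [
    {"kind": "Deployment", "metadata": {"name": "my-app", "labels": {
        HELM_NAME_LABEL: "my-app", HELM_NAMESPACE_LABEL: "flux-system"}}},
    {"kind": "Deployment", "metadata": {"name": "unrelated", "labels": {}}},
]

matches = managed_by_helmrelease(objects, "my-app", "flux-system")
print([f'{o["kind"]}/{o["metadata"]["name"]}' for o in matches])
```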

This is the specific friction Kunobi removes. We automate this traversal by correlating the Flux object, the Helm storage history, and the native Kubernetes ownerReferences into a single, unbroken chain.

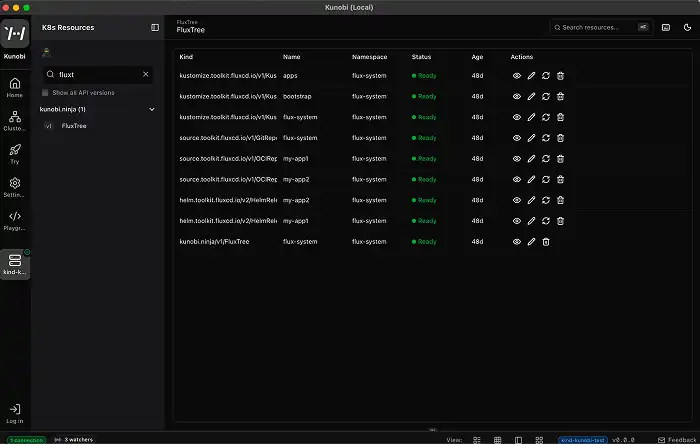

Visualizing the Dependency Graph

In Kunobi, we map these relationships instantly using the standard Kubernetes API. However, because GitOps operates at two levels—the controller level and the runtime level—we visualize this in two distinct stages.

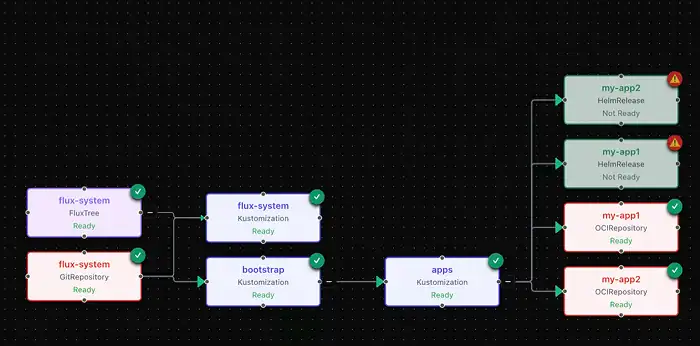

1. The Control Plane View (Flux References)

First, the Flux Tree visualizes the full web of dependencies between Flux resources. We map spec.sourceRef (upstream) and status.inventory (downstream) to show you the complete flow of configuration.

Looking at the view above, we can isolate configuration and sync failures immediately:

- The Source is Healthy: On the left, the `flux-system` GitRepository is green.
- The Pipeline is Valid: The middle `apps` Kustomization is also green.
- The Artifact vs. Release Mismatch: On the right, the OCIRepositories are green (artifact fetched), but the HelmReleases are red.

Diagnosis: This confirms the failure is inside the Helm chart configuration (schema/values), not the network or the git source.
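The spec.sourceRef and status.inventory mapping behind the Flux Tree can be sketched as a small edge builder. This is an illustration under stated assumptions, not Kunobi's implementation: it assumes inventory entry ids follow the `<namespace>_<name>_<group>_<kind>` layout, and the sample Kustomization is hypothetical.

```python
def parse_inventory_id(entry_id):
    """Split an inventory id of the assumed form namespace_name_group_kind."""
    namespace, name, group, kind = entry_id.split("_")
    return {"namespace": namespace, "name": name, "group": group, "kind": kind}


def flux_edges(obj):
    """Return (upstream, downstream) edges for one Flux object."""
    me = f'{obj["kind"]}/{obj["metadata"]["name"]}'
    edges = []
    # Upstream: the source this object reads from.
    source = obj.get("spec", {}).get("sourceRef")
    if source:
        edges.append((f'{source["kind"]}/{source["name"]}', me))
    # Downstream: the objects this one applied to the cluster.
    inventory = obj.get("status", {}).get("inventory") or {}
    for entry in inventory.get("entries", []):
        ref = parse_inventory_id(entry["id"])
        edges.append((me, f'{ref["kind"]}/{ref["name"]}'))
    return edges


# Hypothetical sample Kustomization.
kustomization = {
    "kind": "Kustomization",
    "metadata": {"name": "apps", "namespace": "flux-system"},
    "spec": {"sourceRef": {"kind": "GitRepository", "name": "flux-system"}},
    "status": {"inventory": {"entries": [
        {"id": "flux-system_my-app_helm.toolkit.fluxcd.io_HelmRelease", "v": "v2"},
        {"id": "flux-system_my-app_source.toolkit.fluxcd.io_OCIRepository", "v": "v1"},
    ]}},
}

edges = flux_edges(kustomization)
for upstream, downstream in edges:
    print(f"{upstream} -> {downstream}")
```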

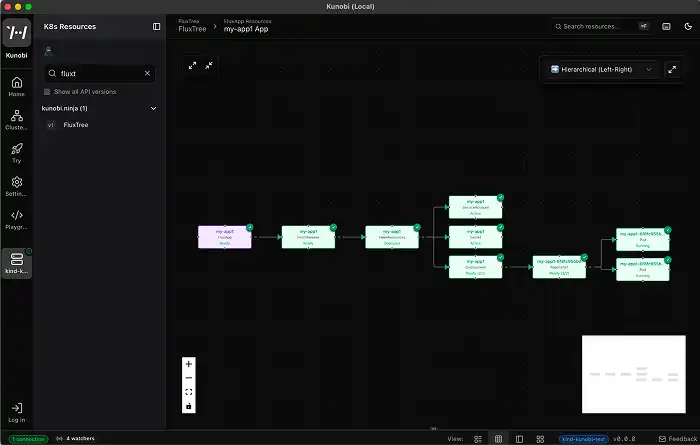

2. The Runtime View (Kubernetes OwnerReferences)

But what if your HelmRelease is Green, but the application is still down?

With Kunobi you can click on any node in the Flux Tree to drill down into the concrete HelmRelease or Kustomization view.

Once inside a specific resource, Kunobi takes you past the Flux boundary. It links the Helm release to the objects it created, then uses metadata.ownerReferences to traverse the rest of the downstream chain: from the Deployment to its ReplicaSets and individual Pods.

This second view captures the runtime reality:

- Bridging the Gap: You see the connection between the Flux `HelmRelease` and the native Kubernetes `Deployment`, a link `kubectl` doesn't show explicitly.
- Runtime Debugging: If the `Deployment` is green but the `Pod` is red, you know the GitOps sync worked perfectly, but the application is crashing (e.g., `OOMKilled` or `CrashLoopBackOff`).
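For the runtime case, the reasons mentioned above live in the Pod's status.containerStatuses. A minimal sketch of pulling them out, assuming a Pod dict shaped like `kubectl get pod -o json` output (the sample Pod is hypothetical):

```python
def pod_failure_reasons(pod):
    """Collect waiting and last-terminated reasons from a Pod's containers."""
    reasons = []
    for cs in pod.get("status", {}).get("containerStatuses", []):
        # Current state, e.g. waiting with reason CrashLoopBackOff.
        waiting = cs.get("state", {}).get("waiting")
        if waiting and waiting.get("reason"):
            reasons.append(waiting["reason"])
        # Previous run, e.g. terminated with reason OOMKilled.
        terminated = cs.get("lastState", {}).get("terminated")
        if terminated and terminated.get("reason"):
            reasons.append(terminated["reason"])
    return reasons


# Hypothetical sample Pod.
pod = {
    "kind": "Pod",
    "metadata": {"name": "my-app-7c9f-x2x"},
    "status": {"containerStatuses": [{
        "name": "app",
        "state": {"waiting": {"reason": "CrashLoopBackOff"}},
        "lastState": {"terminated": {"reason": "OOMKilled", "exitCode": 137}},
    }]},
}

reasons = pod_failure_reasons(pod)
print(reasons)
```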

Pro Tip: Flexible Navigation

You don't always have to start at the global tree. These dependency graphs are available directly within individual HelmRelease or Kustomization views. Whether you drill down from the "Big Picture" or look up a specific resource directly, Kunobi provides the same visual context via distinct paths.

Visual Binary Search

The technical value of this view isn't "pretty boxes." It is scoping.

When an alert fires saying my-app2 is degraded, the Flux Tree lets you binary-search the problem immediately:

- Is the Root Node Red? The issue is likely in the Source Controller (Git repo access, artifact fetch).

- Is the Middle Node Red? The issue is likely a schema validation error or a conflict in the Helm Controller.

- Is the Leaf Node Red? The GitOps flow worked perfectly; your application is crashing (`OOMKilled`, `CrashLoopBackOff`).
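The three questions above amount to finding the first red node along the chain from source to workload. With only three layers this is a simple scan rather than a true binary search; the layer names and hint texts below are hypothetical and only paraphrase the list.

```python
# Hypothetical hints, paraphrasing the three cases in the list above.
HINTS = {
    "source": "Source Controller: check Git repo access and artifact fetch.",
    "release": "Helm Controller: check for schema validation errors or conflicts.",
    "workload": "Application: check Pods for OOMKilled or CrashLoopBackOff.",
}


def first_red_layer(chain):
    """Return the first unhealthy layer in a source-to-workload chain.

    chain is a list of (layer_name, is_green) tuples ordered from the
    root of the tree to its leaves. Returns None if every layer is green.
    """
    for layer, is_green in chain:
        if not is_green:
            return layer
    return None


# Example: source and release are green, the workload is red.
chain = [("source", True), ("release", True), ("workload", False)]
layer = first_red_layer(chain)
print(HINTS.get(layer, "Everything is green."))
```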

No "Magic," Just Visibility

Kunobi isn't maintaining an external state database to build this tree. We read the live state of your cluster, specifically metadata.ownerReferences and the status conditions of your Flux custom resources.

This means what you see in the Flux Tree is the exact, real-time reality of your cluster. If you delete a pod via CLI, it disappears from the graph instantly.
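As a rough illustration of deriving a node's color from live status, the sketch below reads the Ready condition from an object's status.conditions. The color mapping, including grey for Unknown, is an assumption made for the example and not Kunobi's actual palette; the sample object is hypothetical.

```python
# Hypothetical color mapping for the three possible condition statuses.
COLORS = {"True": "green", "False": "red", "Unknown": "grey"}


def ready_status(obj):
    """Return the status of the Ready condition, or 'Unknown' if absent."""
    for cond in obj.get("status", {}).get("conditions", []):
        if cond.get("type") == "Ready":
            return cond.get("status", "Unknown")
    return "Unknown"


def node_color(obj):
    """Map an object's Ready condition to a display color."""
    return COLORS.get(ready_status(obj), "grey")


# Hypothetical sample HelmRelease with a failed reconciliation.
helm_release = {
    "kind": "HelmRelease",
    "metadata": {"name": "my-app"},
    "status": {"conditions": [
        {"type": "Ready", "status": "False", "reason": "InstallFailed"},
    ]},
}

print(node_color(helm_release))
```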

We built this because we believe you shouldn't have to be a "Human Kubernetes Parser" to understand why a deployment failed. You should just be able to see it.

Debug Your Own Clusters Locally

You don't need to migrate your stack or install CRDs to get this visibility.

Because Kunobi is a local-first desktop app, it respects your existing ~/.kube/config and RBAC. There are no agents to install in your cluster and no permissions to grant to a SaaS cloud.

You can keep your current GitOps workflow exactly as it is—just clearer.

👉 Download the Beta — load your config and turn your terminal lists into a navigable graph today.
